Teacher Performance Data Measuring Student Growth

Unlike previous incentive programs based on achievement data, TIA requires districts to identify effective teachers using student growth data. Districts are not required to use STAAR data or other standardized assessments for the local designation system.

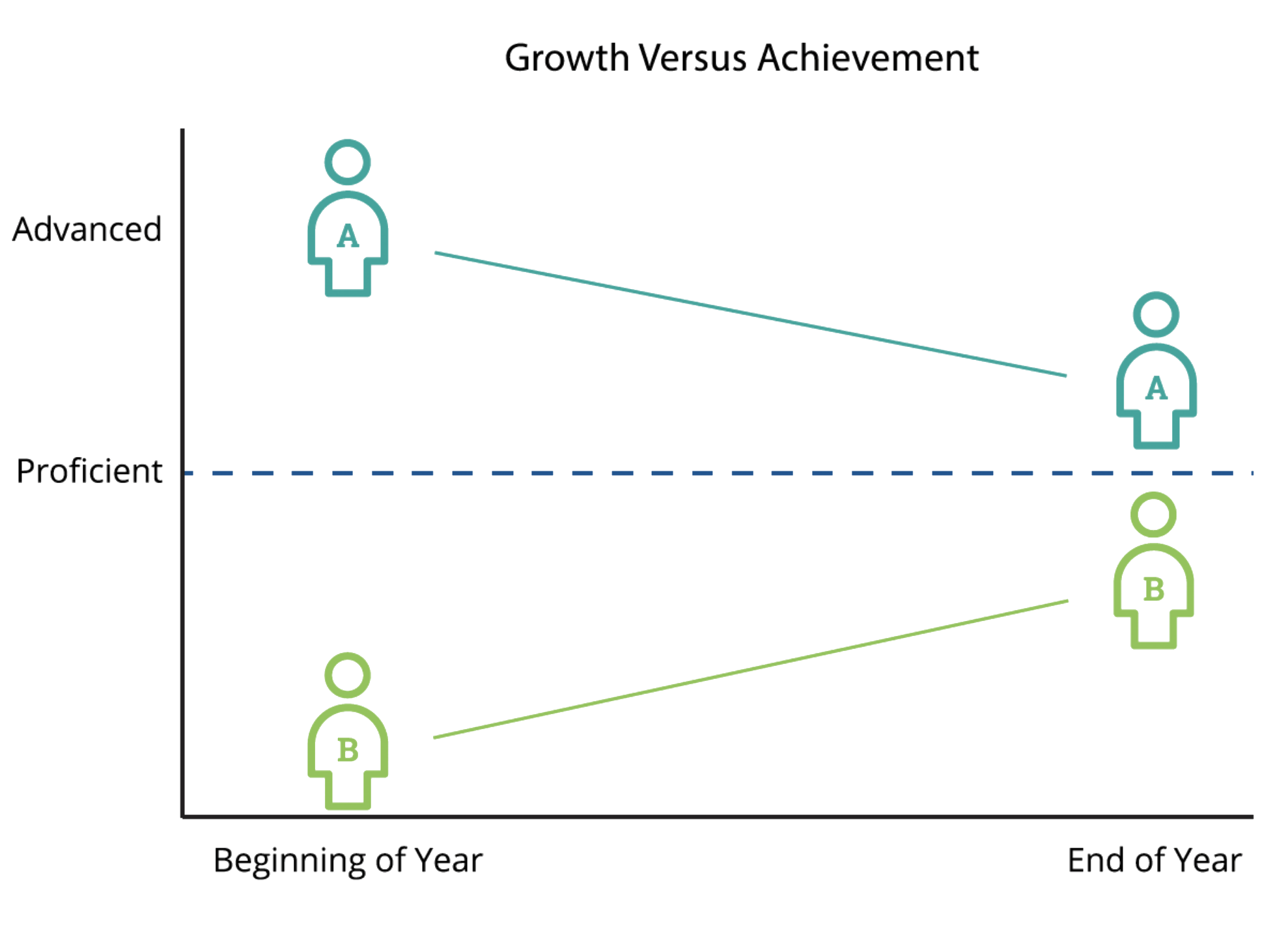

TIA performance standards for each designation level align with teacher effectiveness based on the teacher’s percentage of students who meet or exceed an expected growth target over the course of a single school year. Rather than measuring the magnitude of growth, TIA measures effectiveness by the impact teachers have on all students, with an expected growth target set for each individual student. This method allows more equitable access to designation for effective teachers, regardless of their student population.

In the graph above, Student A starts the year Advanced in their growth measure and ends Proficient. Although Student A still scored high enough to meet Achievement goals, they would not meet or exceed their student growth measure. Student B starts the year below Proficient and ends the year still below Proficient. However, Student B has moved closer to Proficient and therefore would meet or exceed their student growth measure.
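The contrast between achievement and growth can be sketched in a few lines of Python. This is an illustration only: the 0-100 scale, the `PROFICIENT_CUT` value, the scores, and the per-student `growth_target` values are all hypothetical and do not come from TIA guidance.

```python
from dataclasses import dataclass

# Hypothetical cut score on a hypothetical 0-100 scale (assumption, not TIA guidance).
PROFICIENT_CUT = 70


@dataclass
class Student:
    name: str
    boy_score: int      # beginning of year score
    eoy_score: int      # end of year score
    growth_target: int  # expected EOY score set for this individual student

    def met_achievement(self) -> bool:
        # Achievement looks only at where the student ends the year.
        return self.eoy_score >= PROFICIENT_CUT

    def met_growth(self) -> bool:
        # Growth compares the EOY score with the student's own target.
        return self.eoy_score >= self.growth_target


# Student A starts Advanced and slips; Student B starts low and improves.
student_a = Student("A", boy_score=90, eoy_score=75, growth_target=90)
student_b = Student("B", boy_score=40, eoy_score=60, growth_target=55)

for s in (student_a, student_b):
    print(s.name, "achievement:", s.met_achievement(), "growth:", s.met_growth())
```

Under these assumed numbers, Student A meets the achievement bar but not the growth target, while Student B misses the achievement bar but meets the growth target.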

Student Growth Performance Standards

Eligible Assessment Options for Student Growth Measures

Districts may choose from any of the four TIA recognized student growth measures, or a combination thereof, for each eligible teaching assignment:

- Student Learning Objectives

- Portfolios

- Pre-Tests and Post-Tests

- Value-Added Models

For example, a district may choose to use AP exams as the student growth measure for AP teachers, but use both Student Learning Objectives and portfolios as the student growth measures for Fine Arts teachers.

Student Learning Objectives

Student Learning Objectives (SLOs) focus on a foundational skill that is developed throughout the course curriculum and tailored to the context of individual students. SLOs measure student growth through a Body of Evidence with a minimum of five pieces of student work. Teachers set expected growth targets for each student. They evaluate their students individually using the Body of Evidence.

TIA requires district SLOs to align with all guidelines from TexasSLO.org.

Student Learning Objectives contain three phases:

Phase 1: Create the SLO

- Create a skill statement

- Create an Initial Skill Profile (ISP)

- Match current students to ISP

- Create a Targeted Skill Profile (TSP)

- Set expected growth targets for each student

Phase 2: Monitor Progress

- Monitor student work

- Define what counts as a quality task, assessment, or project

- Set a minimum of five data points

- Conduct Body of Evidence check-ins at mid-year with teacher and appraiser

Phase 3: Evaluate Success

- Evaluate student progress at end of year

- Ground student mastery levels in their Body of Evidence

- Require SLO evidence review as part of end of year teacher appraisal conference

Using the Student Growth Tracker, teachers regularly review each student’s Body of Evidence against the Targeted Skill Profile. At the end of the year, teachers work with their appraiser to determine which students met or exceeded their expected growth target, based on each student’s Body of Evidence. The number of students who met or exceeded the expected growth target is then divided by the total number of students with a complete Body of Evidence. This gives each eligible teacher a growth rating: the percentage of students who met or exceeded expected growth.
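The growth rating calculation described above can be sketched as follows. The `StudentResult` type, its field names, and the sample roster are hypothetical and are used only for illustration. The met-or-exceeded decision itself is assumed to come from the teacher and appraiser review, not from code.

```python
from dataclasses import dataclass


@dataclass
class StudentResult:
    name: str
    complete_body_of_evidence: bool  # student has a complete Body of Evidence
    met_or_exceeded_target: bool     # outcome of the teacher/appraiser review


def growth_rating(results: list[StudentResult]) -> float:
    """Percentage of students with a complete Body of Evidence
    who met or exceeded their expected growth target."""
    counted = [r for r in results if r.complete_body_of_evidence]
    if not counted:
        raise ValueError("no students with a complete Body of Evidence")
    met = sum(r.met_or_exceeded_target for r in counted)
    return 100.0 * met / len(counted)


# Hypothetical roster: student D is excluded from the denominator.
roster = [
    StudentResult("A", True, True),
    StudentResult("B", True, False),
    StudentResult("C", True, True),
    StudentResult("D", False, False),
    StudentResult("E", True, True),
]
print(f"{growth_rating(roster):.1f}%")  # 3 of 4 counted students -> 75.0%
```

Only students with a complete Body of Evidence enter the denominator, so an incomplete record neither helps nor hurts the teacher's rating in this sketch.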

Sample Exemplar ISP

Sample Exemplar TSP

texasslo.org

Portfolios

Using a collection of standards-aligned artifacts, portfolios assess student growth over the course of a year by measuring a student’s movement along a skill progression rubric.

Portfolios can be used in any course or content area but are best suited to courses that have skill standards in creation and production, such as:

- Career and Technical Education

- Fine Arts/Performance Arts

- Early Childhood

- Special Education

With portfolios, each student’s beginning of year skill level is determined using a skill progression rubric, and an expected growth target is set for the student’s end of year skill level that demonstrates movement along the rubric. Assessment of student work products is grounded in the specific skill details of the rubric. Best practice is to collect multiple artifacts that are valid and specific to the evaluated content. The type of artifact varies by content area. Examples include audio and video of a student musical, choir, or theatrical performance; student artwork, either scanned digitally, submitted as a hard copy, or both; and student-created products such as welding or woodwork.
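As a minimal sketch of movement along a skill progression rubric, the check below compares a student's end of year level with the expected growth target. The four level names in `RUBRIC_LEVELS` and the function name are hypothetical. A district's actual rubric defines its own levels and scoring criteria.

```python
# Hypothetical four-level skill progression rubric, ordered lowest to highest.
RUBRIC_LEVELS = ["Beginning", "Developing", "Proficient", "Advanced"]


def met_portfolio_target(boy_level: str, target_level: str, eoy_level: str) -> bool:
    """Return True when the EOY rubric level reaches or passes the target."""
    boy, target, eoy = (
        RUBRIC_LEVELS.index(level) for level in (boy_level, target_level, eoy_level)
    )
    if target < boy:
        # Assumption: a growth target never sits below the starting level.
        raise ValueError("target level is below the BOY level")
    return eoy >= target


# Student starts at "Beginning" with a target of "Developing".
print(met_portfolio_target("Beginning", "Developing", "Proficient"))   # exceeded target
print(met_portfolio_target("Developing", "Proficient", "Developing"))  # no movement
```

The first call returns `True` and the second returns `False`, because the second student shows no movement from the beginning of year level.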

TIA requires that the district portfolio process:

- Demonstrates student work aligned to the standards of the course

- Demonstrates mastery of standards

- Utilizes a skills proficiency rubric with at least four different skill levels

- Includes criteria for scoring various artifacts

- Includes more than one artifact

Portfolio Overview and Resources

Portfolio Planning Worksheet

Portfolio Resources for Implementation

Pre-Tests and Post-Tests

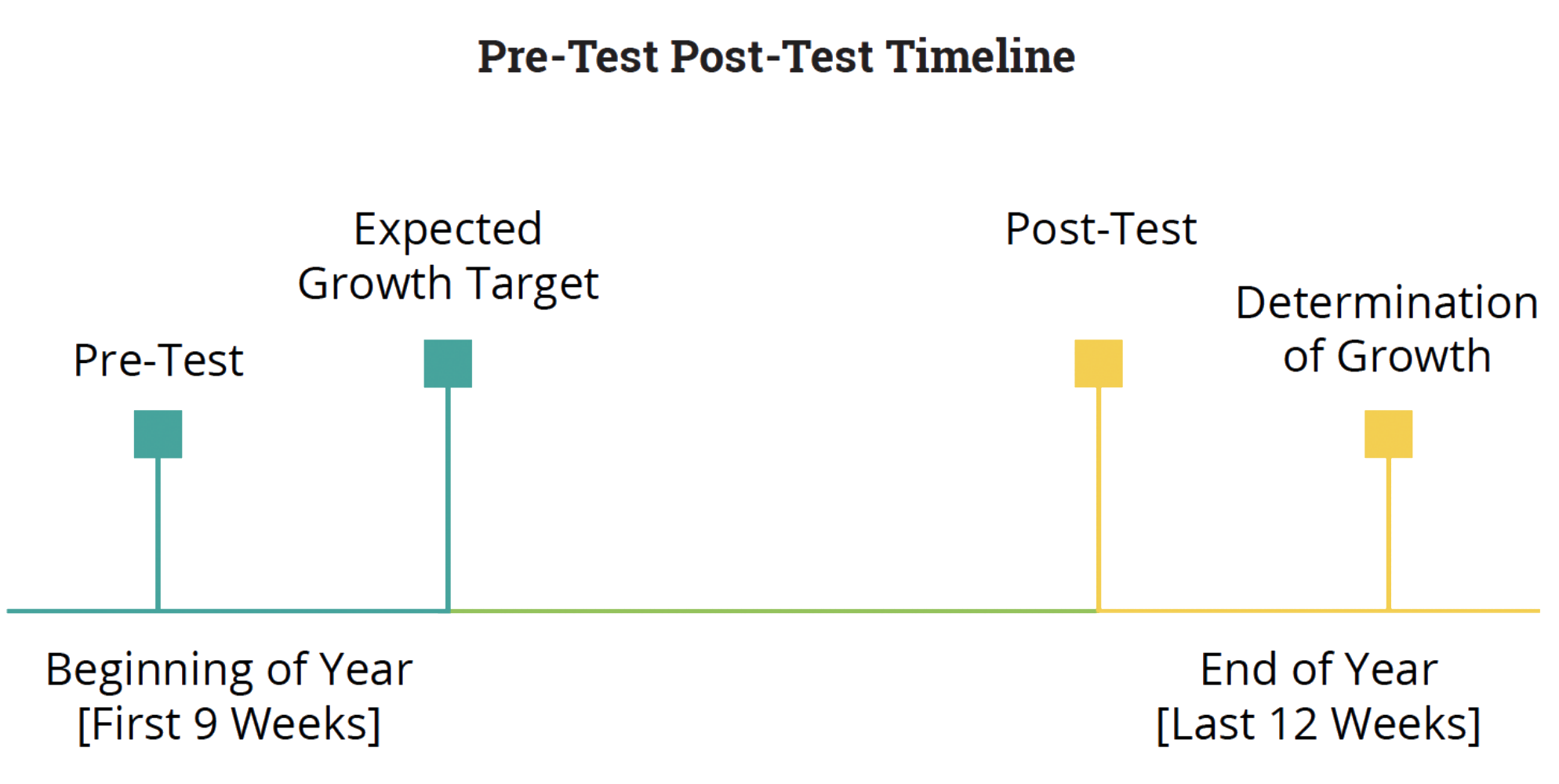

The pre-test and post-test approach pairs a beginning of year (BOY) pre-test, administered within the first 9 weeks of the school year, with an end of year (EOY) post-test, administered during the last 12 weeks of the school year. Districts must select or create pre-tests and post-tests aligned directly to the standards of the course in which the teacher is providing instruction.

Standards can be based on TEKS, the College Board AP standards (for AP courses), or other approved state or national standards such as National Council of Teachers of Mathematics (NCTM) standards, American Council on the Teaching of Foreign Languages (ACTFL) standards, or CTE industry standards. The instrument must assess student proficiency in the standards of the course with questions that represent an appropriate level or range of levels of rigor for the course.

Districts can choose to use the expected growth targets that come with a third-party test (when available) or set expected growth targets locally at the district level. If using the expected growth targets from a third-party test, districts must ensure the third party uses a valid and reliable method for calculating expected growth.

Pre-tests and post-tests must have a set administration window and standardized guidelines to ensure validity and reliability. All tests must be kept secure prior to administration, while testing is taking place, and during the scoring process. Annual training should be provided to all test administrators and proctors.
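A district could check test dates against the BOY and EOY windows described above with a sketch like the one below. It assumes the windows are counted in calendar weeks from the first and last instructional days. A district calendar may count weeks differently. The function names and the dates are hypothetical.

```python
from datetime import date, timedelta

BOY_WINDOW = timedelta(weeks=9)   # pre-test: first 9 weeks of the school year
EOY_WINDOW = timedelta(weeks=12)  # post-test: last 12 weeks of the school year


def pre_test_in_window(test_date: date, first_day: date) -> bool:
    """True if the pre-test falls within 9 calendar weeks of the first day."""
    return first_day <= test_date < first_day + BOY_WINDOW


def post_test_in_window(test_date: date, last_day: date) -> bool:
    """True if the post-test falls within the final 12 calendar weeks."""
    return last_day - EOY_WINDOW < test_date <= last_day


# Hypothetical school calendar.
first_day = date(2024, 8, 12)
last_day = date(2025, 5, 23)

print(pre_test_in_window(date(2024, 9, 3), first_day))   # inside the BOY window
print(post_test_in_window(date(2025, 2, 10), last_day))  # too early for EOY
```

With this assumed calendar, the first check returns `True` and the second returns `False`.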

| Option | Pre-Test Creator | Who Sets Growth Target | Post-Test Creator | Examples |
|---|---|---|---|---|
| 1 | Third-Party | Third-Party | Third-Party | STAAR Transition Tables; NWEA RIT Goals |
| 2 | Third-Party | District | Third-Party | Released STAAR Pre-Test; District Growth Targets; Spring STAAR Post-Test |
| 3 | District | District | District | District Pre-Test; District Growth Targets; District Post-Test |
| 4 | District | District | Third-Party | District Pre-Test from Item Bank; District Growth Targets; Spring iStation Post-Test |

Third-Party Assessment Options

Setting Expected Growth Targets Within Pre-test and Post-Test

Best Practices for Building Local Quality Assessments

Best Practices for Writing Local Quality Assessment Items

2024 STAAR Guidance for TIA Now Available

For all options, districts are required to ensure that each assessment:

- Aligns with the standards of the course tied to the eligible teacher

- Allows for setting an individual student growth target between the pre-test and the post-test

- Follows state and district guidelines for administration and scoring security

- Contains questions representing an appropriate level of rigor and range of question levels

- Accurately measures what is taught over the course of the year

Value-Added Models

Value-added models (VAM) use statistical modeling to set predicted scores based on multiple years of historical testing data across multiple content areas. VAM is widely recognized as a valid and reliable method to determine student growth. It is based on an accurate underlying statistical model that predicts future performance based on past ability. In a VAM, whether a student performs at, above, or below their expected score correlates with the teacher’s effectiveness.

Common assessments used with VAM include:

- STAAR

- NWEA MAP

- Iowa Tests

- SAT/PSAT

- ACT

- iStation

- mCLASS

A value-added model looks at how much progress students make from year to year. It compares the combination of a student’s current and prior assessments with a student’s achievement on a quality, normed assessment such as STAAR. By looking at a student’s prior data together with data from other students who have similar testing histories, a predicted or expected score can be calculated for that group of students. Growth is calculated by comparing a student’s expected progress with their actual progress to see whether more than, less than, or the expected amount of growth occurred. The details of the VAM process involve complicated statistical analyses that are often conducted by independent researchers.
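The expected-versus-actual comparison can be illustrated with a deliberately simplified sketch. It is not an actual VAM: real models draw on multiple years and content areas and are typically run by independent researchers. Here a single prior-year score predicts the current-year score through an ordinary least squares line. The scores, the function names, and the `tolerance` band are hypothetical.

```python
from statistics import mean


def fit_line(prior: list[float], current: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of current-year score on prior-year score."""
    x_bar, y_bar = mean(prior), mean(current)
    sxx = sum((x - x_bar) ** 2 for x in prior)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(prior, current))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


# Hypothetical testing histories: (prior-year score, current-year score).
history = [(400, 430), (450, 470), (500, 520), (550, 560), (600, 610)]
slope, intercept = fit_line([p for p, _ in history], [c for _, c in history])


def expected_score(prior: float) -> float:
    """Predicted current-year score for a student with this prior score."""
    return intercept + slope * prior


def growth_category(prior: float, actual: float, tolerance: float = 5.0) -> str:
    """Classify actual progress relative to expected progress."""
    diff = actual - expected_score(prior)
    if diff > tolerance:
        return "more than expected"
    if diff < -tolerance:
        return "less than expected"
    return "expected"


print(growth_category(500, 540))
print(growth_category(500, 500))
```

In this sketch the predicted score for a prior score of 500 is about 518, so a student scoring 540 shows more than expected growth and a student scoring 500 shows less.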

VAM can be used with any nationally normed or criterion-referenced test. The assessment must meet three main criteria to be used in growth models:

- Sufficient scale stretch. The test can distinguish student performance for both high and low achieving students and differentiate growth across all achievement levels. The test must have questions at various difficulty levels to accurately discern a student’s ability, including the ability of students at the high and low ends of the scale.

- Demonstrated relevance and validity. The test must align to state or national standards of what students are expected to know and do.

- Sufficient reliability. The assessment provides consistent results within and across administrations to make comparisons and establish a predictive relationship. The scales must be reliable from year to year.

Considerations: How to Select Student Growth Measures

When selecting a growth measure for TIA, districts must consider the capacity of district and campus personnel to consistently implement each growth measure with fidelity across campuses and teaching assignments.

Key questions when discussing and selecting student growth measures for different teaching assignments include:

- What growth measures are best for each subject area/grade level?

- Is the district currently using any growth measures that align with full readiness on the System Application?

- How will the district set individual growth targets for each measure and track student progress?

- What role will teachers have in setting student growth goals?

- What is the current capacity for implementing different growth measures with fidelity?

Growth Measures: Benefits & Challenges

| Growth Measure | Benefits | Possible Challenges |
|---|---|---|
| Student Learning Objectives | Can be used for all teaching assignments; High teacher engagement; Based on a body of student work | Training for all participating staff is required; Appraiser is heavily involved; Time required to evaluate the Body of Evidence |
| District-Created Pre-Tests and Post-Tests | Can be used for all teaching assignments; Local control; TEA-issued guidance on setting expected growth targets, writing quality assessment items, and building quality assessments | Content and assessment design expertise required to build and approve assessment; Requires multiple levels of review |
| Third-Party Created Pre-Tests and Post-Tests | Demonstrated validity and reliability; Districts may already use third-party vendor tests | May not work for all content areas; May require purchasing |
| Portfolio | Recommended for performance-based classes such as Fine Arts | Heavy planning at BOY; Appraiser may be heavily involved |
| Value-Added Model | Demonstrated validity and reliability; Statewide protocols for administration and scoring (if using STAAR) | Often requires contracting with a third party |

Student Growth FAQs

How will TEA know if Student Learning Objectives (SLOs) are measuring growth effectively?

The application for a local designation system requires districts to explain in detail their procedures and protocols for SLO implementation, including procedures for setting student preparedness levels at the beginning of the year, protocols for collecting the Body of Evidence of student work, and rubrics and protocols used to approve SLOs at the end of the year. All districts must go through the data validation process before TEA determines full system approval.

Can the district utilize existing student growth measures for the local designation system?

Districts may begin by looking at the student growth measures already in place for each assignment and exploring which assignments may require a new or modified option.

The timeline for implementing new student growth measures is often a top consideration when determining eligible teaching assignments and readiness to apply for a local designation system. Districts can opt to start with teaching assignments which already have valid and reliable growth measures while exploring student growth measures for additional teaching assignments in subsequent years.

What student growth measures can be used for teachers in non-tested subjects?

Districts can use locally developed or third-party student growth measures, as long as they are valid and reliable. Examples include SLOs, pre- and post-tests, industry certification exams, and student portfolios. Districts may find the T-TESS Guidance on Student Growth Measures (PDF) helpful as they consider different student growth measures. For more information, visit texasslo.org.